Observability at GoCardless: a tale of API performance improvement

Last editedApr 20233 min read

At GoCardless we adopt observability best practices to operate our systems effectively and make the right data-driven decisions.

In this post we’ll describe how we recently identified and fixed a performance problem in our public API through the instrumentation tools we use every day.

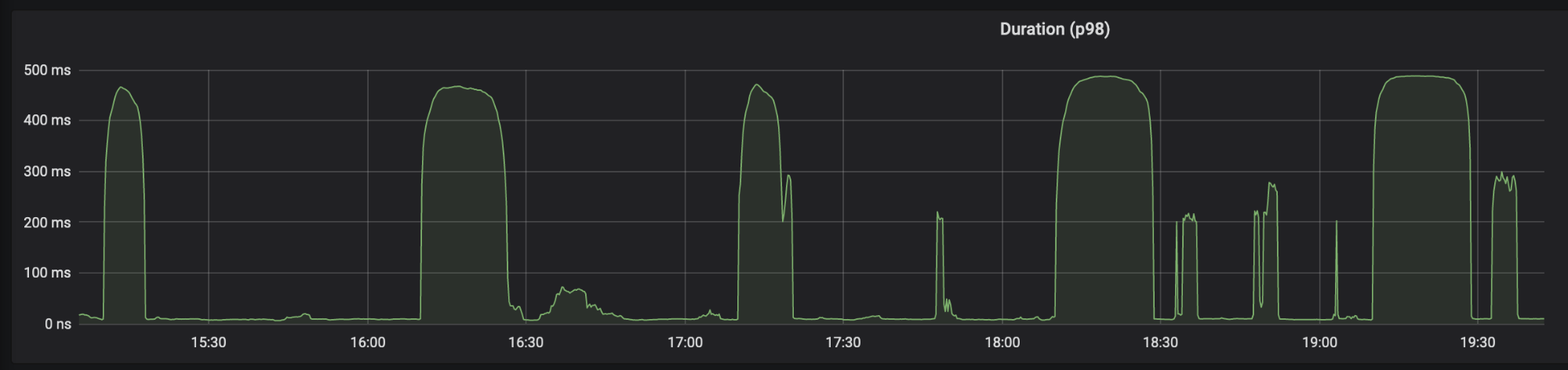

What we saw

We serve our public API from a Rails application and monitor request latency using Prometheus. We use quite a standard setup, with every single endpoint handler wrapped by an instrumentation layer which measures the execution time and stores it into an histogram metric, then we use Grafana for querying and visualization of such data.

At some point we noticed an inexplicable behaviour with some POST requests: a low traffic endpoint that normally executes within less than 10 milliseconds would suddenly spike up to 400 milliseconds when hit more heavily.

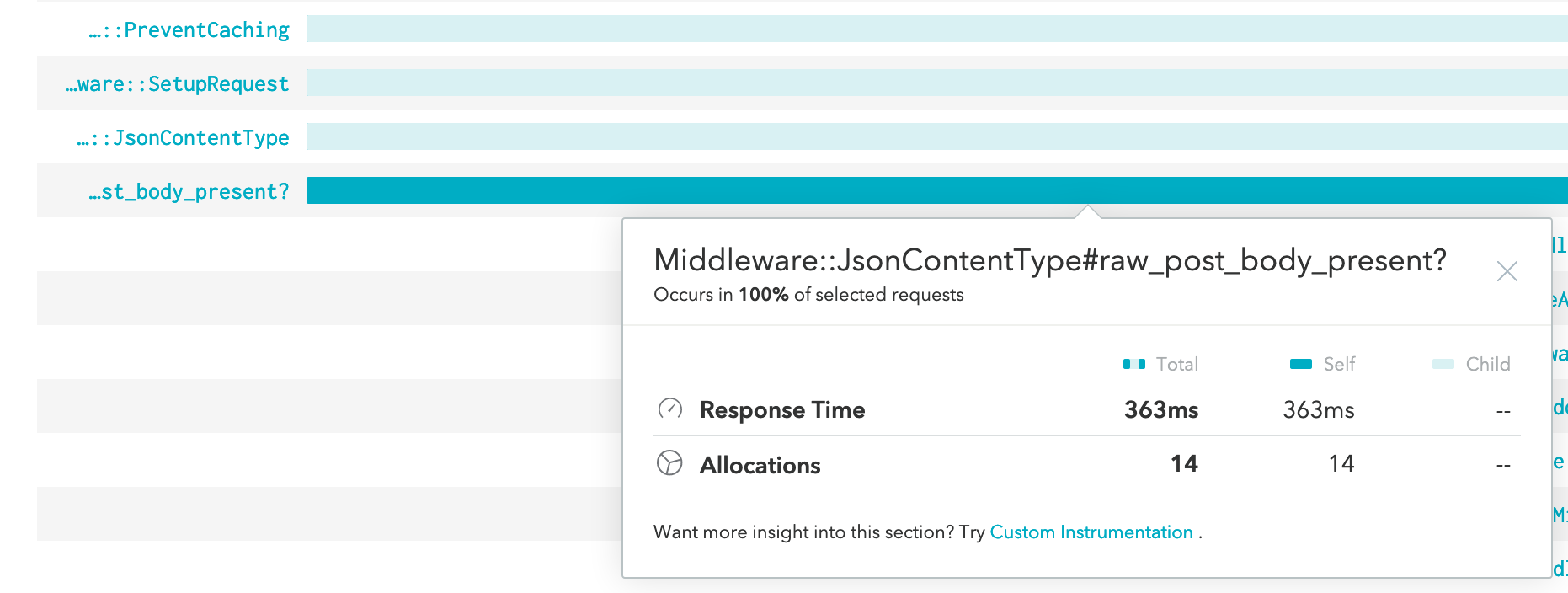

At first, we thought our code was doing something odd which could cause poor performance under higher loads. We use Skylight to profile our application in production, so we quickly introduced some ad-hoc method instrumentation: we wanted to specifically measure the execution time of some methods within one of our Coach middlewares.

Instrumentation highlighted that when request latency skyrocketed the application was spending the majority of its time reading the request body. We were surprised: data showed that all requests within a given timeframe were about the same size in bytes, so we couldn’t understand why some of them were so much slower than others.

We took a step back and carefully analysed application logs in search for a pattern, until we finally noticed that all those slow requests included an “Expect: 100-continue” HTTP header.

We know HTTP, don’t we?

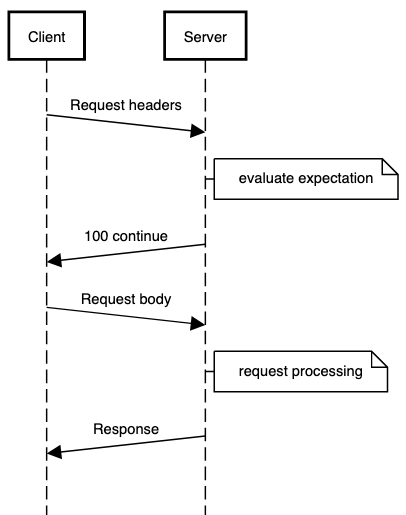

The most common use case for the “Expect” header is when an HTTP client wants to inform the server that they’re about to send a presumably large request body. The client will only send request headers and wait for the server to evaluate whether the expectation can be fulfilled or not. If the server responds with a “100 Continue” HTTP code, the client will then continue sending the request body.

The “Expect” header is not used by any common browser, but can be used by clients such as Curl. It breaks the classic request-response cycle of HTTP/1.1 and implies multiple communication round trips: the client will send a partial request, wait for a 100 status code, discard it and resume sending the request payload; whereas the server initially receives only the request headers, sends out a partial response indicating whether it accepts (status 100) or rejects (status 417 or any 4xx) the request, then (if accepted) receives the request body and processes it as normal.

A server might decide to reject the request if the client expectation cannot be met: for example, if the Content-Length is too large it can respond with a “413 Request Entity Too Large” and avoid an unnecessary data transfer. In general, the server might be able to handle any error condition that can be detected by merely looking at request headers (e.g. missing Authorization header or invalid HTTP method). In practice, many server implementations either respond with “100 Continue” straight away or don’t implement this protocol feature at all.

Our app setup

Let’s go back to the problem of slow requests. We mentioned above that our public API is served by a Rails application. We use Unicorn as a Rack server, and shield it behind a reverse proxy for better efficiency.

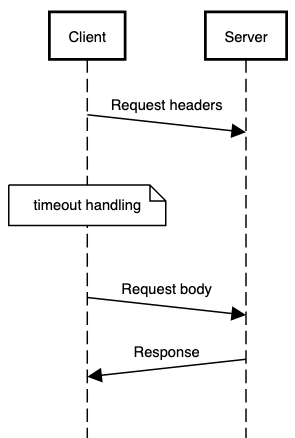

Why would requests with an “Expect: 100-continue” header be so slow? Turns out we didn’t know that Unicorn delegates to the application itself deciding whether the client expectations can be fulfilled or not (and so does the reverse proxy as well). So our application was not handing the Expect header correctly. But, wait, shouldn’t client requests hang on forever if the server is not acting upon them? (not really forever, but up to the maximum execution timeout defined in our setup)

The Expect mechanism is not widely implemented in HTTP servers and proxies. Clients that use this feature are generally wise enough to set a timeout after which the request body is sent out anyway. For example, curl uses a configurable timeout of 1 second.

At this point, we went back to analyse the request logs and found the culprit. We noticed the majority of those slow requests were issued by the .NET client library we provide to our users. And the underlying default HTTP client in .NET corefx sets an Expect timeout of 350 milliseconds, which explained why we were observing latencies of up to 400 milliseconds.

Wrap up

We’ve since fixed the way we handle the “Expect” header in our Rails application by implementing a thin Rack middleware that inspects the request headers to decide whether to accept or reject a request. We’ve gained a better understanding of Unicorn, the application server we use and love, which is solid and flexible but sometimes under-documented.

We’ve updated our client libraries as well, so make sure you always use the most recent version available!

At GoCardless we’re building the first global network for recurring payments. In this article we’ve described how we implement observability by using a wide array of different tools to correlate diagnostic signals across our stack. We’re passionate about building a reliable and performant infrastructure, if you are too come join us.