Here's your change

All the news and resources you need to take the pain out of payments for your business.

Forrester Consulting: Rethink Your Payment Strategy To Save Your Customers And Bottom Line

Discover more about the state of recurring payments across the globe in this exclusive report.

Latest articles

![[Webinar] Introduction to GoCardless for football clubs](https://images.ctfassets.net/40w0m41bmydz/4PL2Zst5lT2l6ivbyGSDGa/c25022ab735e86b3928af3a6bfa1aab4/customer-stories-hub-hero-hemmington_3x.png?w=680&h=680&q=50&fm=png)

Featured articles

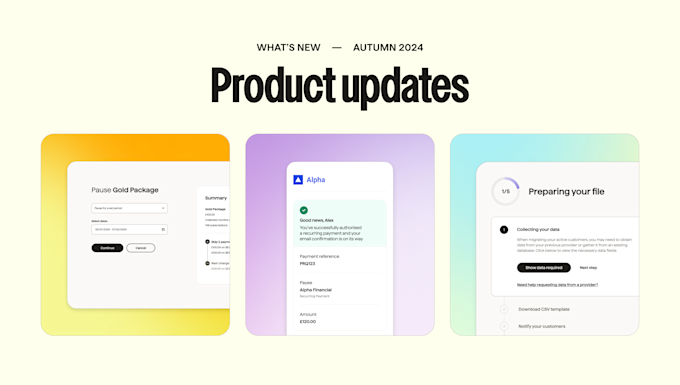

See what improvements we’ve made this autumn

Browse by category

Insight into how the GoCardless Engineering team is improving recurring payments globally.

The latest updates from GoCardless, with information on our newest products and events we’re attending.

Practical payment advice for accounting and advisory firms to help them empower their clients.

Recurring payments and Bank Debit expertise tailored to the world’s biggest subscription and invoicing businesses.

Expanding your business internationally? Here's everything you need to know about payments.

Everything you need to know about sending and receiving invoices, and improving your processes.

Read how Direct Debit is an easy, secure, and convenient way to automate payment collection.

Popular downloads

![[Report] Global payment preferences for recurring B2B purchases](https://images.ctfassets.net/40w0m41bmydz/4fg6rX8ZuFVOU78XgCwo2k/70243c578b7edeb8083c457101198e8e/Card_Carousel-Chargebee-G2-s_CEO_Consult_Webinar.jpg?w=680&h=410&fl=progressive&q=50&fm=jpg)

We surveyed 4,990 businesses across 9 markets to determine which payment methods businesses prefer for different use cases.

We've gathered advice on churn from some of the world’s most successful and outspoken investors and SaaS C-suite executives.

Your comprehensive resource for understanding the challenges and opportunities that Strong Customer Authentication (SCA) presents for global subscription businesses.

Popular guides

Reference guides

Everything you wanted to know about Direct Debit.

A complete guide to invoicing and invoices.

A guide to major local payment methods in Europe.

Online payments for businesses: a complete guide.

Everything businesses need to know about SCA.

How to get your customers to pay by Direct Debit.

A detailed guide to Direct Debit in Australia.

A user guide for Direct Debit in New Zealand.

How to take ACH payments from customers in the US.

The complete guide to Direct Debit in Canada.

Find out how to create an effective RFP.

Online Direct Debit resources for accountants