Incident review: API and Dashboard outage on 10 October 2017

Last editedJun 202411 min read

This post represents the collective work of our Core Infrastructure team's investigation into our API and Dashboard outage on 10 October 2017.

As a payments company, we take reliability very seriously. We hope that the transparency in technical write-ups like this reflects that.

We have included a high-level summary of the incident, and a more detailed technical breakdown of what happened, our investigation, and changes we've made since.

Summary

On the afternoon of 10 October 2017, we experienced an outage of our API and Dashboard, lasting 1 hour and 50 minutes. Any requests made during that time failed, and returned an error.

The cause of the incident was a hardware failure on our primary database node, combined with unusual circumstances that prevented our database cluster automation from promoting one of the replica database nodes to act as the new primary.

This failure to promote a new primary database node extended an outage that would normally last 1 or 2 minutes to one that lasted almost 2 hours.

Our database setup

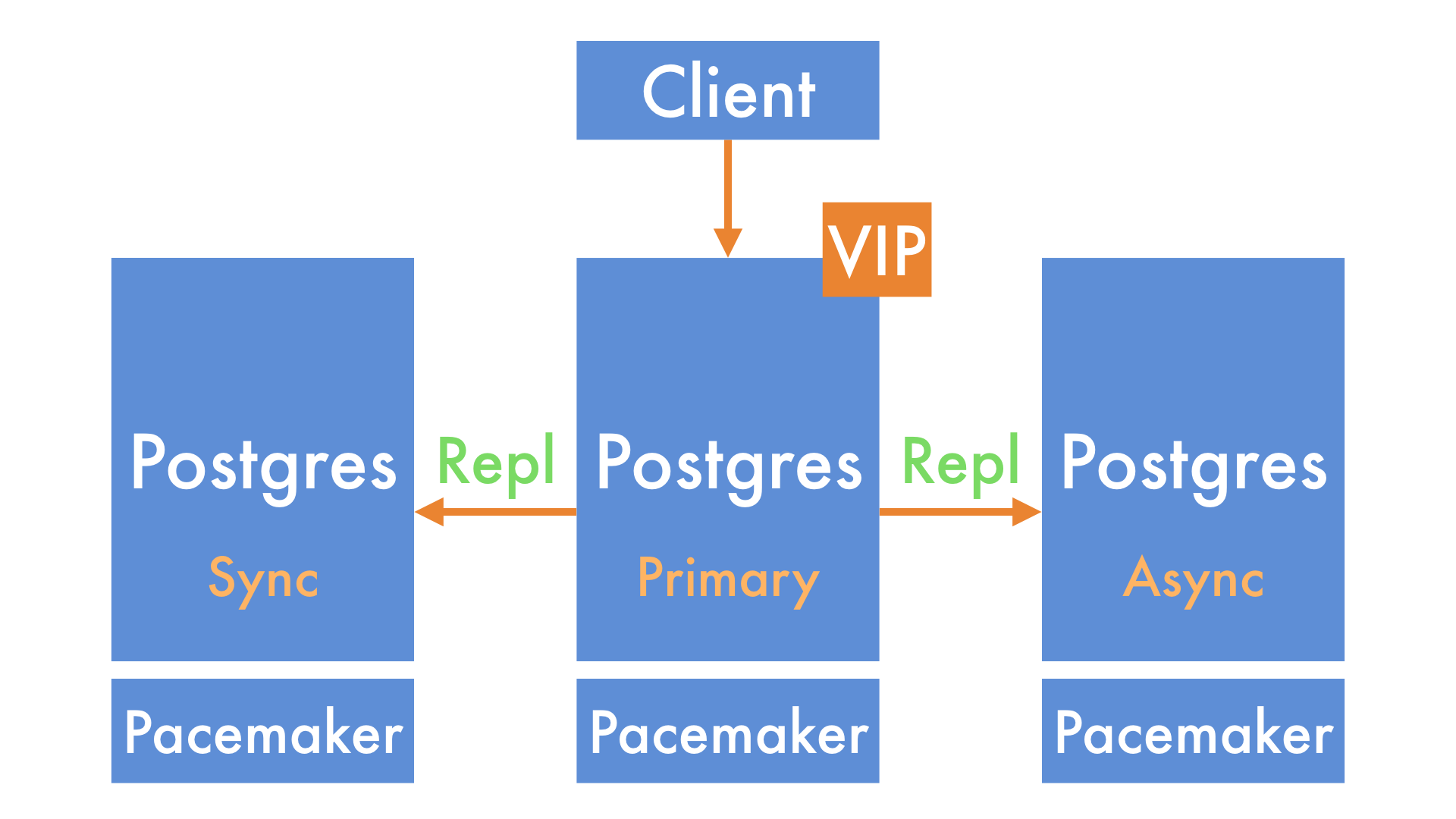

Before we start, it's helpful to have a high-level view of how we store data at GoCardless.

All of our most critical data1 is stored in Postgres.

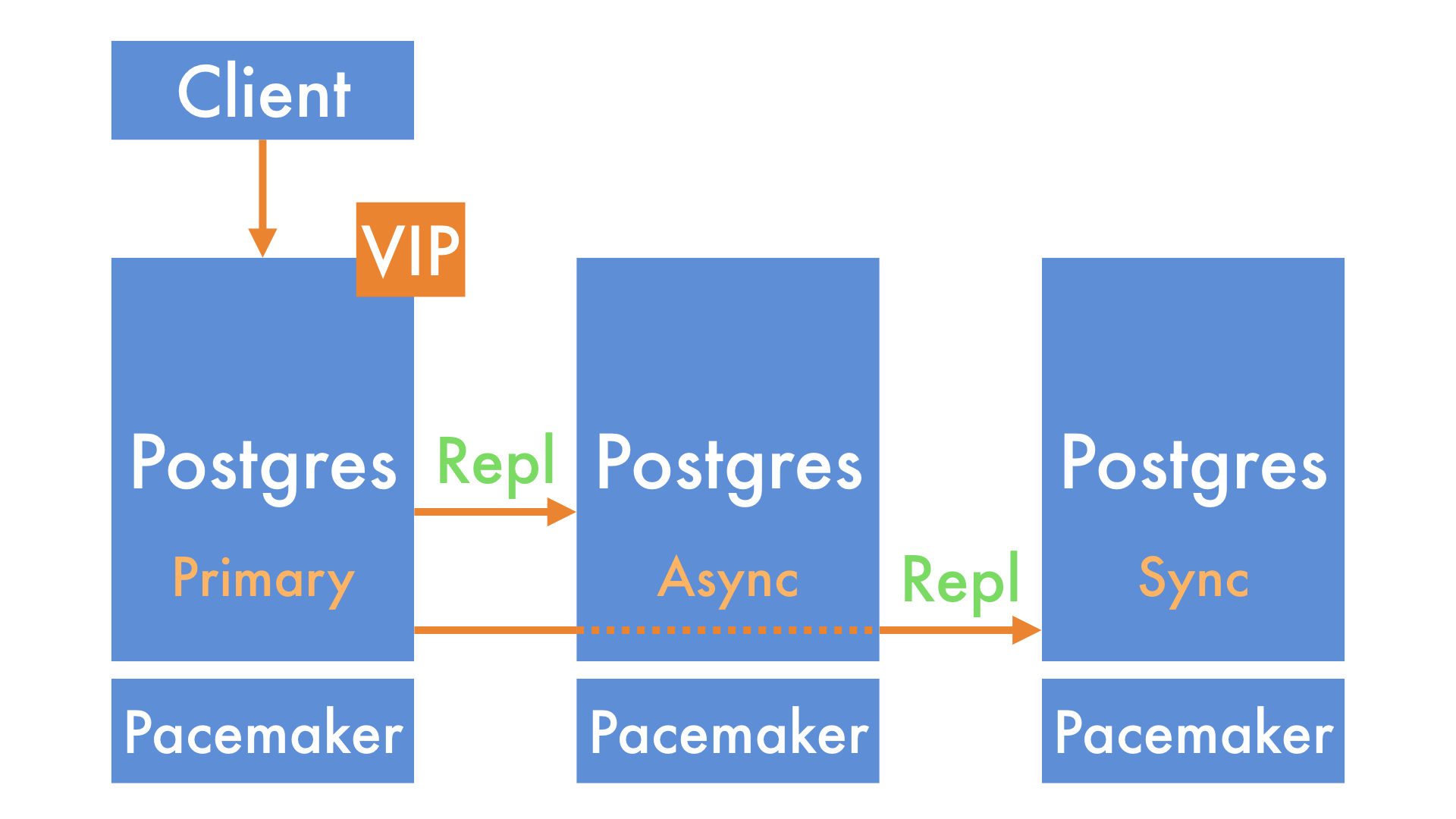

We run Postgres in a cluster of 3 nodes, with a primary, 1 synchronous replica and 1 asynchronous replica. This means that we always have at least 2 copies of every piece of data by the time we respond successfully to an API request.

To manage the promotion of a new primary node in the event of machine failure, we run a piece of software called Pacemaker on each node in the cluster. Clients, such as our Ruby on Rails applications, connect to the primary node2 using a virtual IP address (VIP) that is also managed by Pacemaker.

Put together, it looks a little like this:

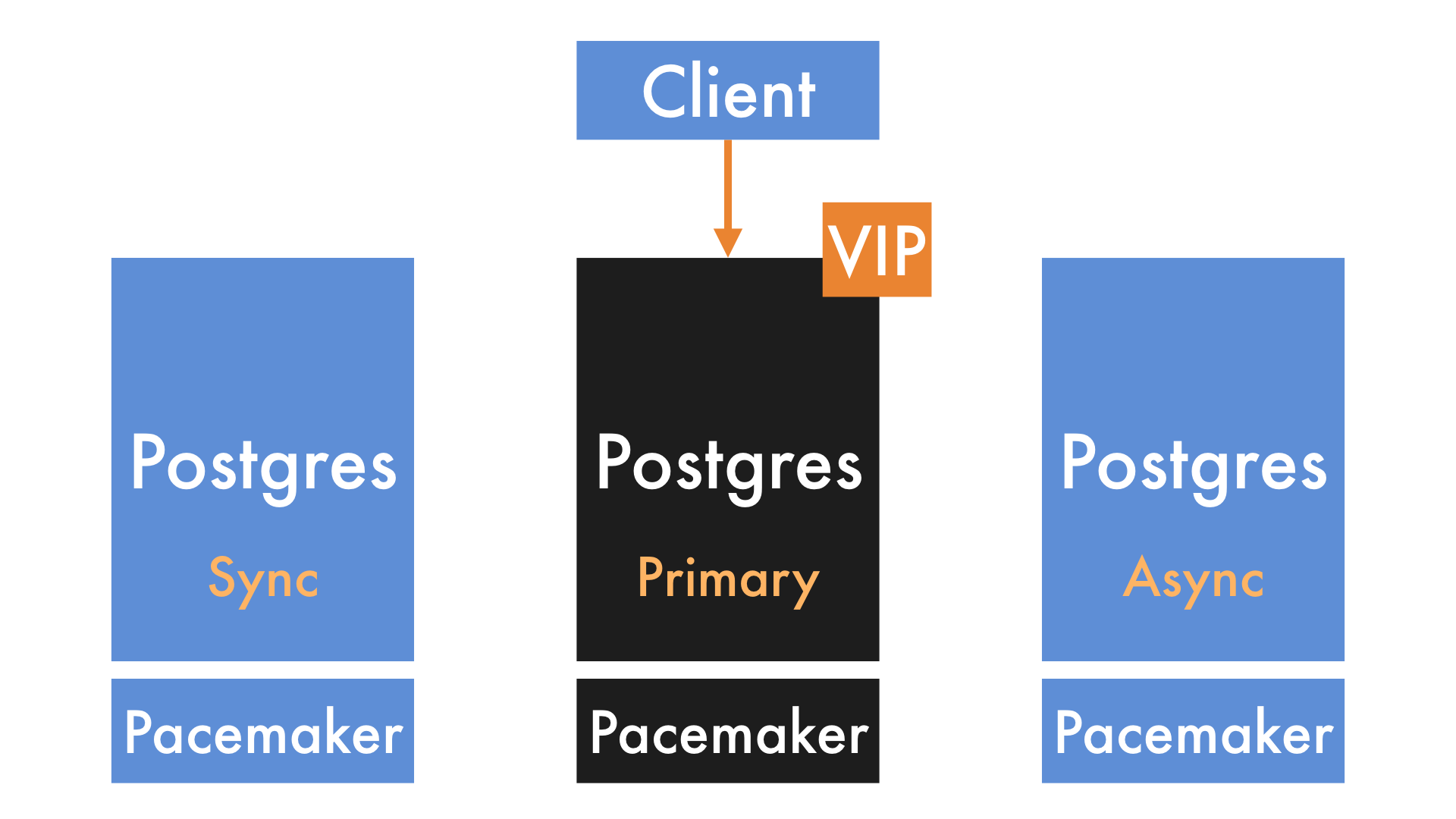

When the primary node fails, the cluster notices.

It promotes the synchronous replica, which is guaranteed to have a copy of every write (e.g. new payment) that the primary accepted. It also sets up the old asynchronous replica as the new synchronous replica.

Once the VIP is moved across, applications can carry on their work.

A Site Reliability Engineer (SRE) then adds a new replica back into the cluster.

Incident timeline

So, how did all this go wrong?

All times in this section are in British Summer Time (UTC+1).

15:09: Our monitoring detects the total outage of our API and Dashboard; engineers begin to investigate.

15:11: We see evidence of a disk array failure on the primary. We are unsure why the cluster hasn't already failed over to the synchronous replica.

15:17: We power off the broken primary Postgres node. This is done so that we don't have a machine in the cluster that is online, but with a broken disk array. We believe that with only the synchronous and asynchronous nodes online, the cluster software will promote a new primary. It quickly becomes clear that this is not the case.

15:18: We clear the error counts (

crm resource cleanup) in Pacemaker to prompt it to rediscover the state of the Postgres instances. Doing so has no effect. The cluster will not promote the synchronous replica.We spend the next hour trying a variety of approaches to promote a new primary.

Our last few attempts centre around editing the configuration on the synchronous replica to try to promote it ourselves. We put the Pacemaker cluster into maintenance mode (

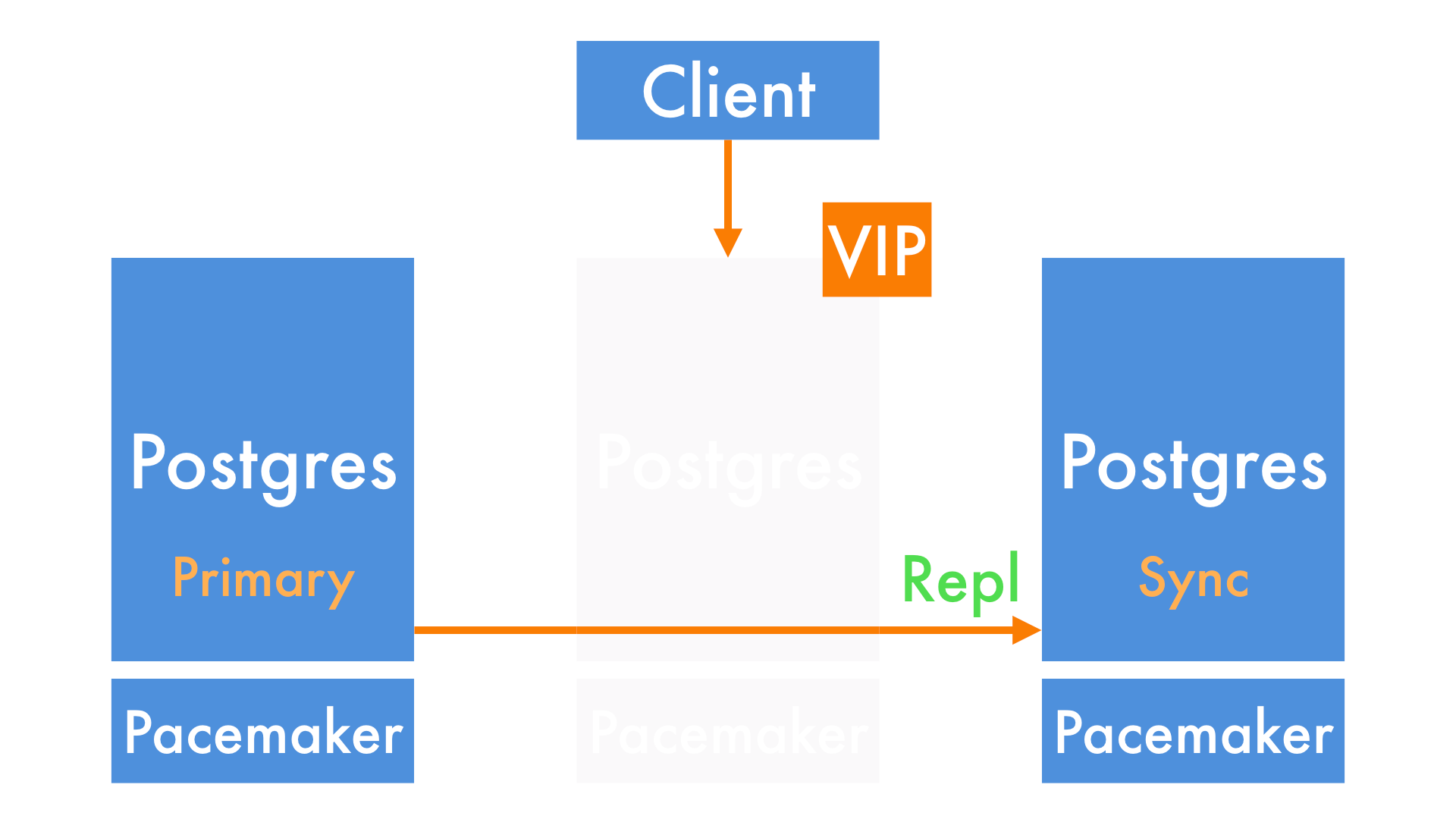

crm configure property maintenance-mode=true), remove the configuration flag that tells Postgres to be a replica fromrecovery.conf, and bring the cluster out of maintenance mode. Every time, it brings the replica back with its originalrecovery.conf, and we are left with no primary.16:18: We decide that our attempts at this approach have run on for too long, and that we need to try something else. We set the cluster into maintenance mode one last time, configure the synchronous replica to be a primary, and start Postgres ourselves.

Since the cluster also manages the VIP, and we've set it into maintenance mode, we reconfigure our backend applications to connect to the actual IP address of the new primary.

16:46: Our manually promoted primary Postgres node is working, and the configuration changes are being rolled out to our applications.

16:59: Our monitoring systems confirm that our API and Dashboard are back up.

The immediate fallout

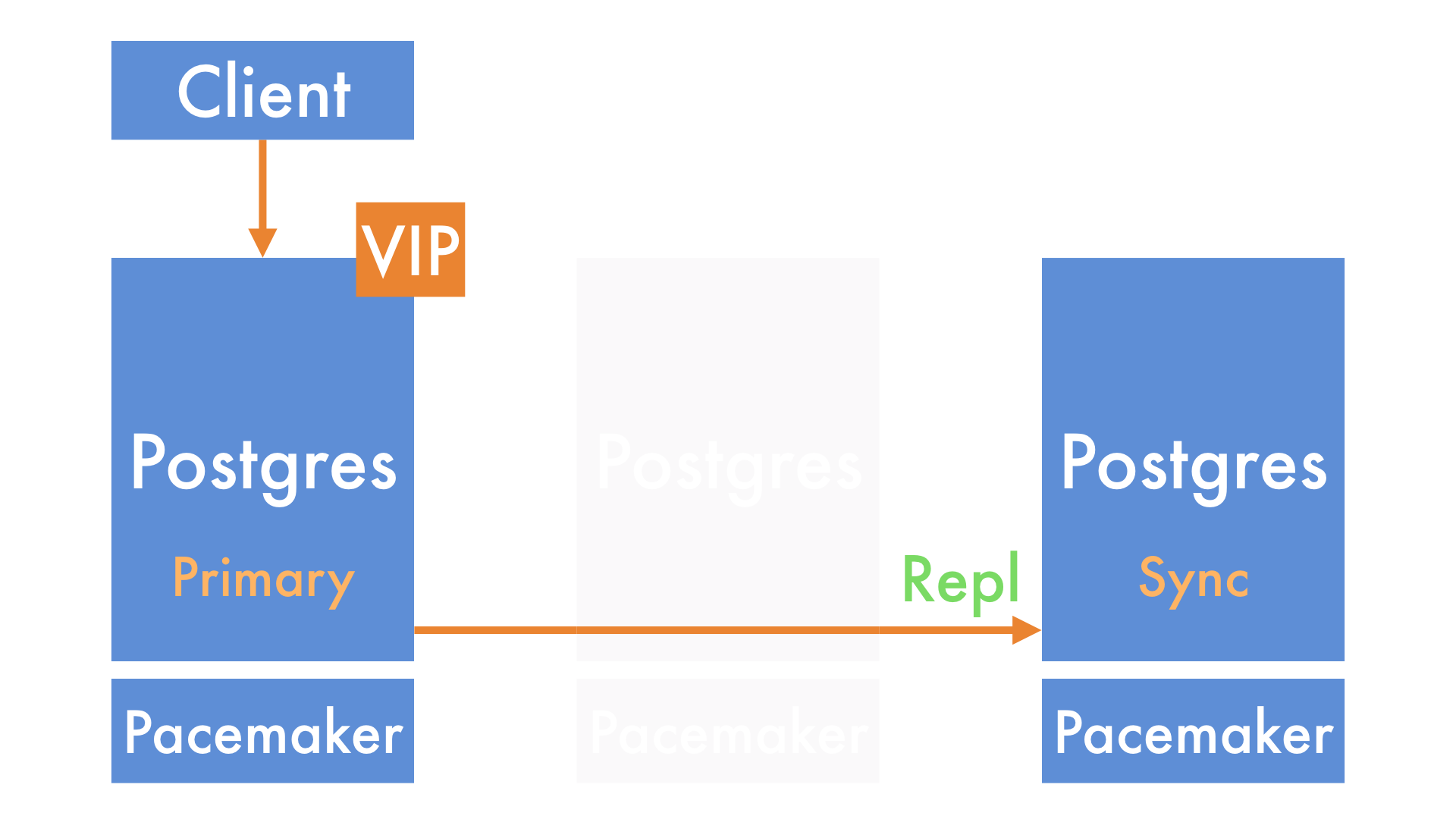

Having brought our systems back online, our next priority was to restore the database cluster to its usual level of redundancy. This meant bootstrapping a third node as an asynchronous replica.

Since Pacemaker was still in maintenance mode, there would be no automatic failover if a machine were to fail now. We believed we were likely to be running this manually-managed setup for a while3, and in the event of our primary failing, we wanted to be able to promote the synchronous replica as quickly as possible.

We decided to introduce another VIP, to be managed manually by the infrastructure team. In the event of our primary failing, we would promote the synchronous replica and move the VIP over ourselves.

As this was an incident triggered by a disk array failure, out of caution we spent some time verifying the integrity of our data. After running every test we could think of, we found no evidence of data corruption.

Once that was done and we felt safe with the GoCardless services running as they were, we started planning our next steps.

The following weeks

The day after the incident, the whole team sat down to discuss two issues:

Why did our Pacemaker cluster fail to elect a new primary database node?

How do we move back to having Pacemaker managing our cluster, now that we're in this manually-managed state?

During that discussion, we decided that we'd taken too much manual intervention on our existing database cluster to be confident in bringing back the Pacemaker automation there. We decided that we'd provision a new cluster, replicate data into it, and switch traffic over.

We split up into two subteams - one trying to reproduce the failure, and the other working out how we'd move over to a new database cluster with minimal disruption.

Reproducing the failure

To be confident in a new cluster, we needed to understand why the existing one didn't promote a new primary, and make changes to fix that issue.

For the most part, we did this by analysing the logs of the components involved in the failure. From that analysis, we pulled out several factors that looked like they could be relevant to reproducing the issue:

The RAID controller logged the simultaneous loss of 3 disks from the array. All subsequent read and write operations against it failed.

The Linux kernel set the filesystem backed by that controller into read-only mode. Given the state of the array, even reads weren't possible.

The Pacemaker cluster correctly observed that Postgres was unhealthy on the primary node. It repeatedly attempted to promote a new primary, but each time it couldn't decide where that primary should run.

On the synchronous replica - the one that should have become the new primary - one of Postgres's subprocesses crashed around the time of the disk array failure on the primary. When this happens, Postgres terminates the rest of its subprocesses and restarts.

After that restart, the synchronous replica kept trying to restore a Write-Ahead Log (WAL) file through the

restore_command. On each attempt, it failed with a message stating that the file was invalid (more on this later).

A lot to unpick, right?

Given the complexity involved, it was clear that we'd only get to an answer in a reasonable amount of time if we could repeatedly break a cluster in slightly different ways and see if we could get it to break in the same way our production cluster did on 10 October.

Fortunately, as part of some unrelated work we'd done recently, we had a version of the cluster that we could run inside Docker containers. We used it to help us build a script that mimicked the failures we saw in production. Being able to rapidly turn clusters up and down let us iterate on that script quickly, until we found a combination of events that broke the cluster in just the right way.

A red herring: the invalid WAL file

One of the log entries that stood out, and was a real cause of concern for a while, was the synchronous replica failing to restore a WAL file through its restore_command.

A quick bit of background for those not familiar with Postgres: the Write-Ahead Log is how Postgres records everything you ask it to write (e.g. INSERT, UPDATE, DELETE queries). This log provides strong guarantees of those writes not being lost if Postgres crashes, and is also used to keep replicas in sync with the primary.

There are two ways the WAL can be used: through streaming replication, and through archive_command and restore_command.

In streaming replication, replicas establish an ongoing connection to the primary, which sends them any WAL it generates. If you specify that the replication should be synchronous, it waits for the replica to confirm that it's received the WAL before returning from the query that generated it.

With archive_command, Postgres lets you specify a shell command that will be executed every time a chunk of WAL is generated on the primary. It makes the file name available to the command you specify, so that you can choose what to do with the file. Similarly, restore_command runs on replicas, and passes you the name of the next WAL file the database expects to replay, so you can copy it from wherever you archived it to.

It's common to use both streaming replication and archive_command in combination. Streaming replication keeps your replicas in sync with the most recent changes, and archive_command/restore_command can be used to bootstrap nodes that are further behind by pulling in older WAL files from an archive external to the cluster (e.g. when you want to bootstrap a new node by restoring your last full backup then replaying WAL).

So, what happened during the incident? It turned out that as a final act, the server with the RAID controller issue archived an invalid WAL file to our backup server. When the Postgres subprocess crash caused a restart on the synchronous replica, that Postgres instance came back up and ran its restore_command, pulling in the invalid WAL.

Postgres's internal validation checks saw that the WAL file was invalid, and discarded it. In terms of the data, that didn't matter! That node already had a good copy of those writes, as it was doing streaming synchronous replication from the primary at the time of the failure.

We matched the log line from the validation failure against the Postgres source code, and spent a lot of time reproducing the exact same type of invalid WAL in our local container setup. Keep in mind that it's a binary format, and the contents are fairly dynamic - not the easiest thing to break in a repeatable way!

In the end, we figured out that it played no part in the Pacemaker cluster's inability to promote a new primary. At least we'd learned a little more about the internals of Postgres!

So then, what was it?

Through a process of elimination, we were able to remove steps from the script until we were left with three conditions that were necessary for the cluster to break:

Pacemaker setting:

default-resource-stickinessBy default, Pacemaker doesn't assign a penalty to moving resources (such as a Postgres database process, or a VIP) to different machines. For services like Postgres, where moving a resource (e.g. the VIP that the clients are connected to) causes disruption, this isn't the behaviour we want. To combat this, we set the

default-resource-stickinessparameter to a non-zero value, so that Pacemaker will consider other options before moving a resource that is already running.Pacemaker resource: Backup VIP

As part of another piece of work to reduce load on the primary node, we'd added another VIP to the cluster. The idea was that this VIP would never be located on the primary, so the backup process would always connect to a replica, freeing up capacity for read operations on the primary. We set a constraint on this VIP so that it would never run on the same server as the Postgres primary. In Pacemaker terms, we set up a colocation rule with a

-INF(negative infinity) preference to locate the Backup VIP and the Postgres primary on the same server.At the time of the incident, the Backup VIP was running on the synchronous replica - the node that Pacemaker should have promoted to primary.

Failure condition: two processes crashing at once

Even with the configuration above, crashing the Postgres process on the primary wasn't enough to reproduce our production incident. The only way to get the cluster into a state where it would never elect a new primary was to crash one of Postgres' subprocesses on the synchronous replica, which we saw in the production logs from the incident.

All three of these conditions were necessary to reproduce the failure. Removing the default-resource-stickiness or the Backup VIP led to the cluster successfully promoting a new primary, even with the two processes crashing almost simultaneously. Similarly, crashing only the Postgres process on the primary led to the cluster successfully promoting the synchronous replica.

We spent some time testing different changes to our Pacemaker configuration, and ran into a surprising fix. Somehow, the -INF colocation rule between the Backup VIP and the Postgres primary was interfering with the promotion process, even though there was another node - the asynchronous replica - where the Backup VIP could run.

It turned out that specifying the colocation rule for the Backup VIP the opposite way round worked just fine. Instead of specifying a rule with a -INFpreference between the Backup VIP and the Postgres primary, we could specify an INF preference between the Backup VIP and a replica. When specified that way round, the cluster promotes the synchronous replica just fine under the same failure conditions.

Moving to a new database cluster

Whilst this investigation was going on, the other half of the team were figuring out how to migrate from our manually managed cluster to a new cluster managed once again by Pacemaker.

Fortunately for us, we had some prior work we could turn to here. We've previously spoken about our approach to performing zero-downtime failover within a cluster. The script that coordinates that is publicly available in this GitHub repository.

The talk goes into more detail, but the relevant part isn't too hard to describe.

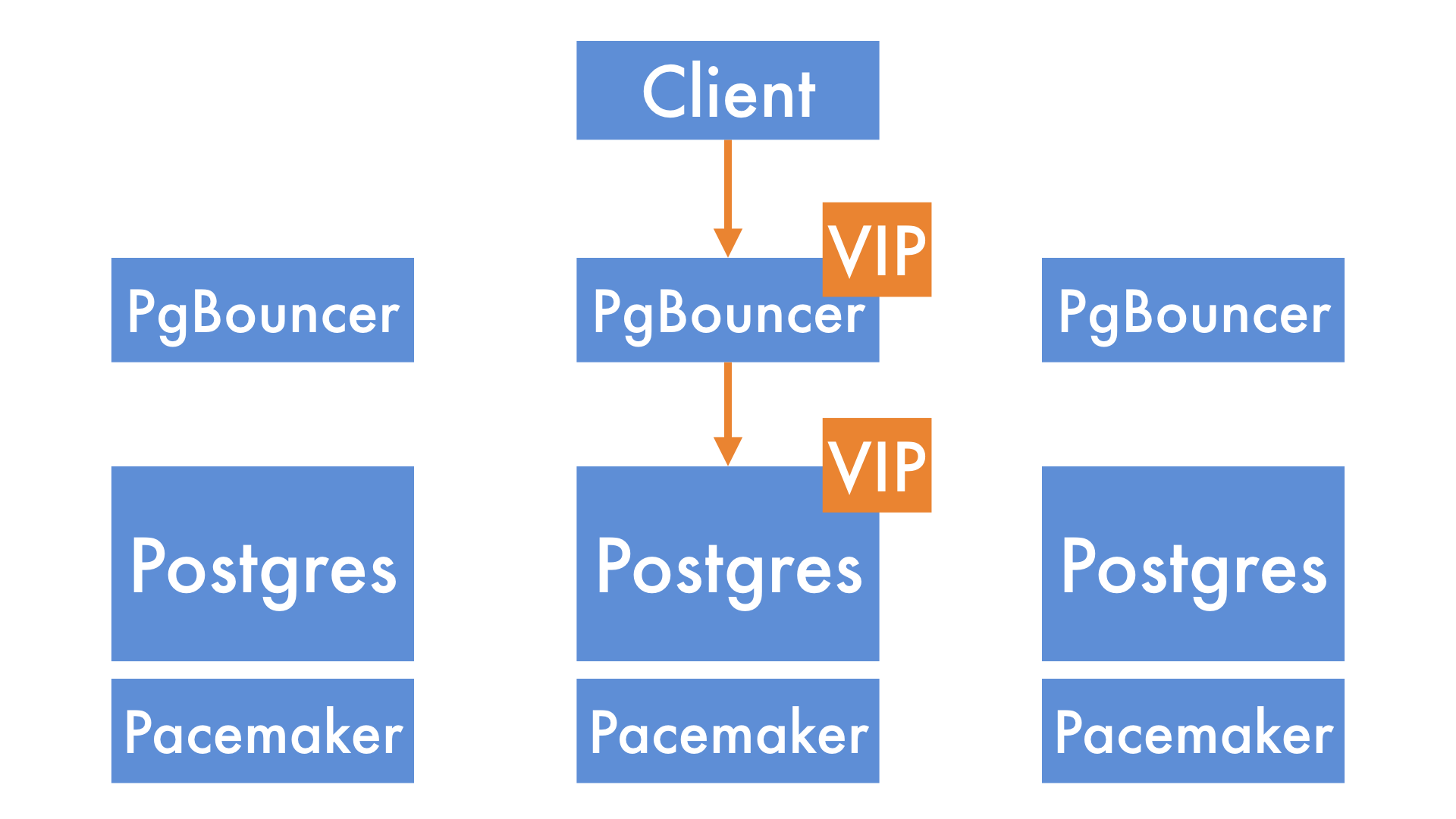

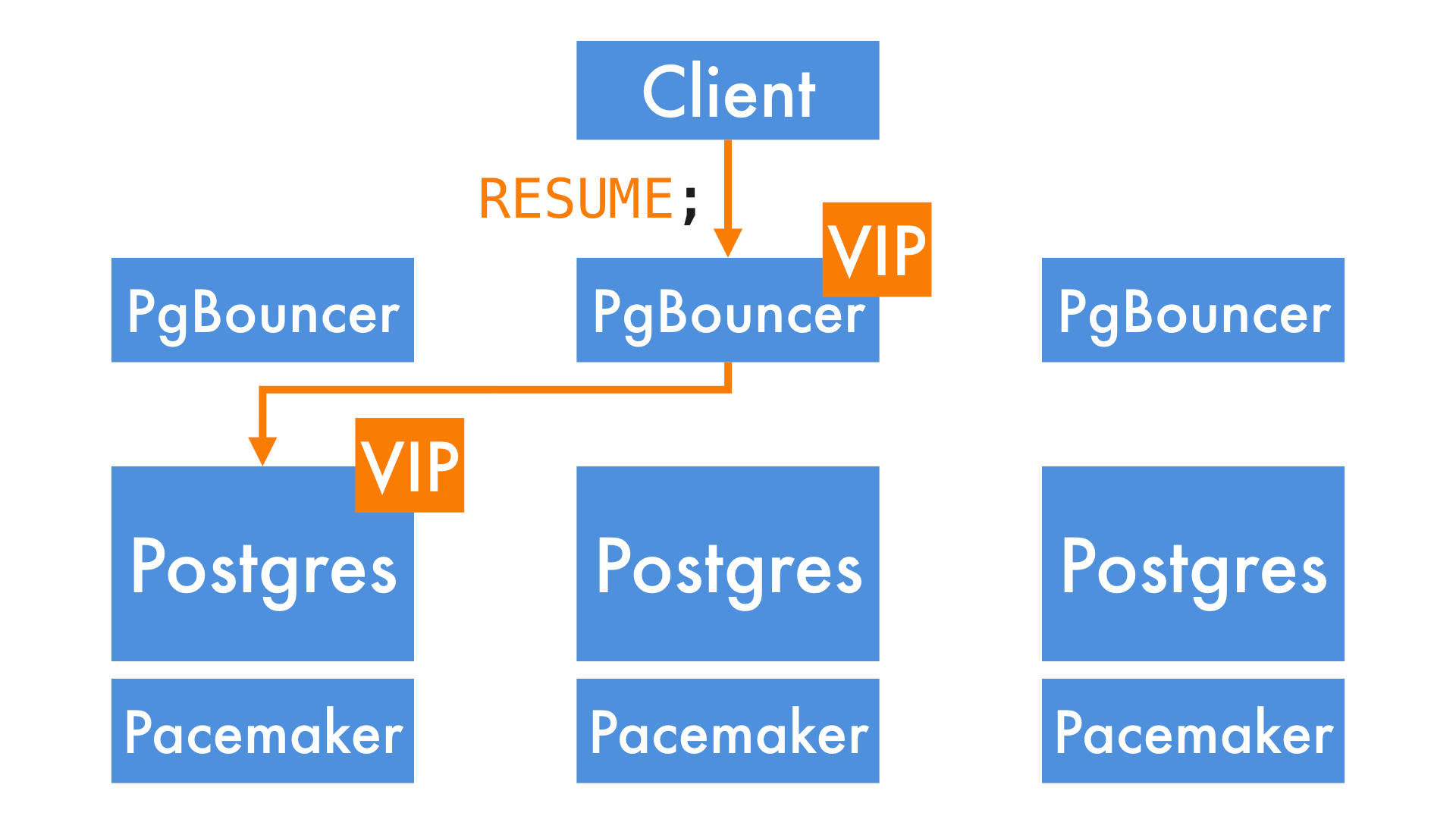

As well as Postgres and Pacemaker, we also run a copy of PgBouncer on each of the nodes in our database cluster. We introduce a second VIP as a layer of indirection. Clients (e.g. our Ruby on Rails applications) connect to this new PgBouncer VIP. PgBouncer, in turn, connects to the original Postgres VIP.

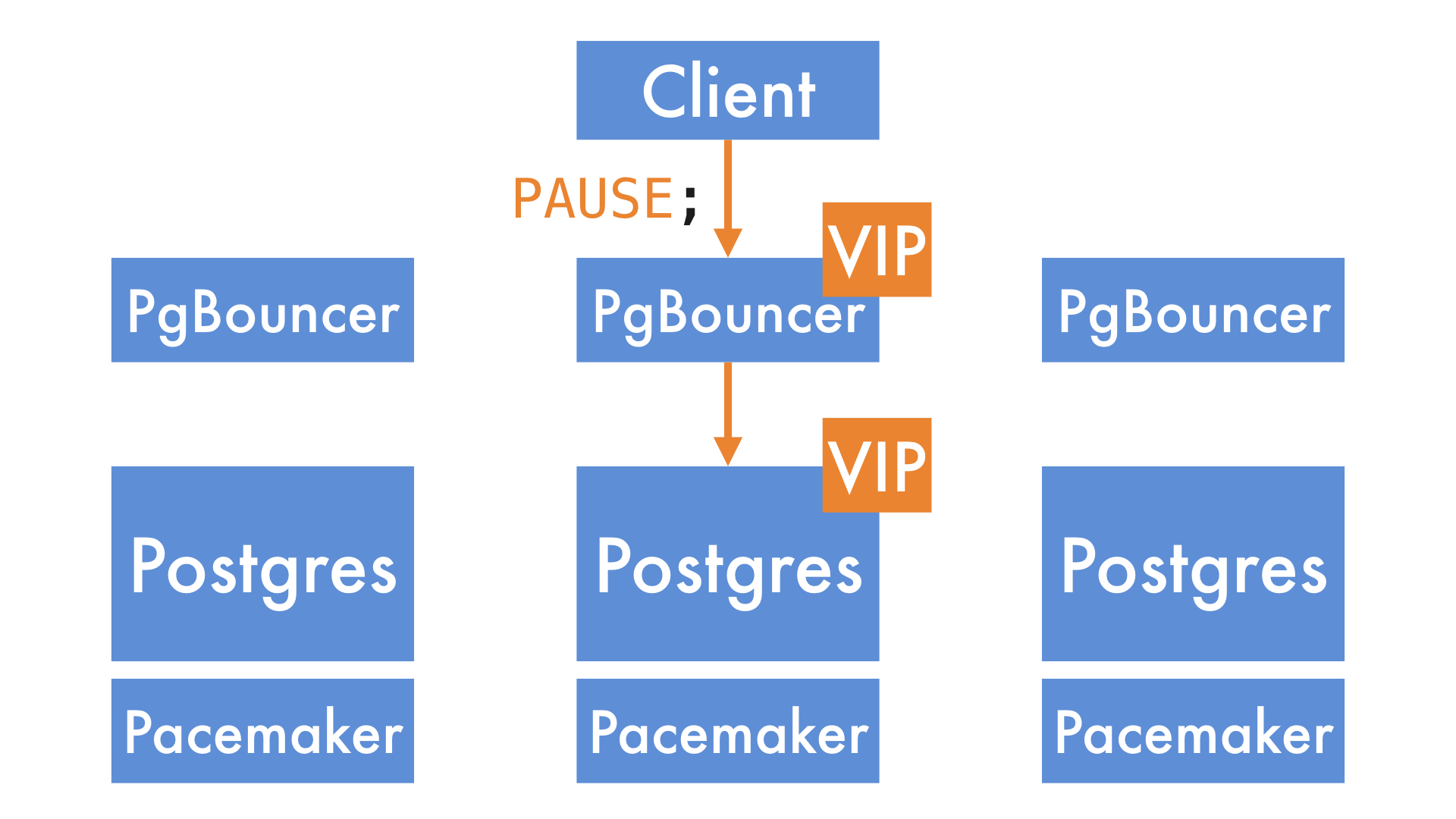

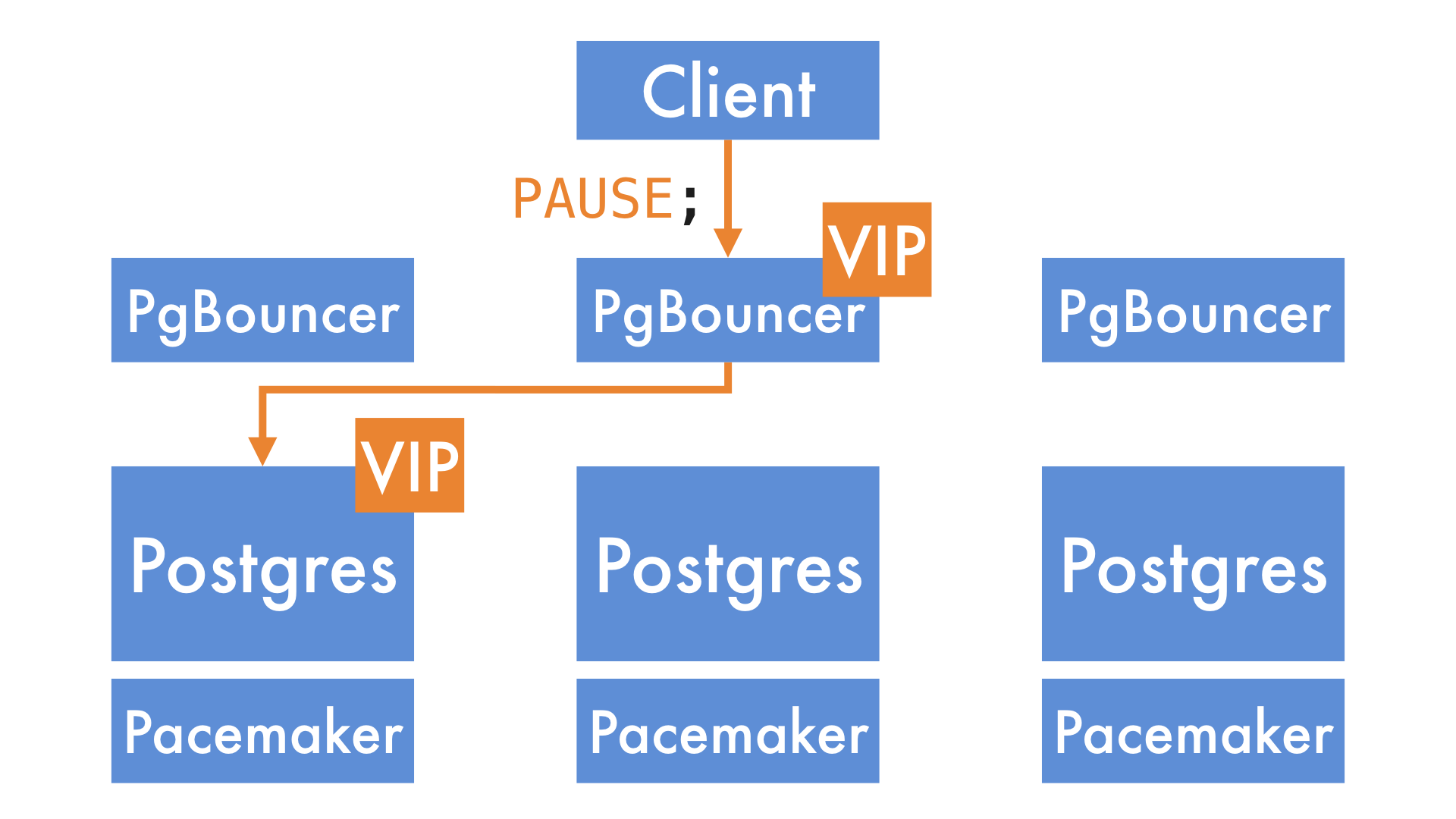

It's possible to pause all incoming queries at PgBouncer. When you do that, it puts them into a queue.

We can then promote a new primary and move the Postgres VIP to it. Note that the VIP the clients are connecting to - the PgBouncer VIP - doesn't need to move, so the clients experience no disruption.

Once the cluster has finished promoting the new node, we tell PgBouncer to resume traffic, and it sends the queued queries to the new primary.

We needed to adapt this procedure a little. The automation that performs it is designed to migrate between different nodes in the same cluster, not two separate clusters.

The subteam responsible for getting us into a new cluster spent the next couple of weeks making those adjustments and performing practice runs. Once they were totally comfortable, we put together a plan to do it in production.

Wrapping up the incident

With a plan in place, and the confidence that we'd understood and fixed the issue which stopped our cluster from failing over on 10 October, we were ready to go. Even with all the testing we'd done, we announced a maintenance window as a precaution.

Fortunately, everything went as planned on the night, and we migrated to our new database cluster without a hitch.

We decommissioned the old cluster, and closed the incident.

What's next?

There's no getting away from the size of this incident.

We feel an immense duty to everyone who trusts GoCardless as their payments provider. We took some time to think through what we'd learned from this incident, with a focus on how we could improve our reliability in the future. Some of the key items we came up with were:

Seemingly simple Pacemaker configuration can lead to extremely unusual behaviour

On the surface, defining a rule that says two resources must not run together seems like it would be the opposite of defining a rule that says two resources must run together. In reality, they cause the system to behave in entirely different ways in certain failure conditions. We can take this knowledge into any future work we do with Pacemaker.

Unrelatedly, but conveniently, we're about to move away from using VIPs to direct traffic to specific Postgres instances. Instead we'll be running proxies on the application servers that direct traffic to the right node based on the state of the cluster. This will drastically reduce the number of resources managed by Pacemaker, in turn reducing the potential for weird behaviour in the cluster.

Some bugs will only be surfaced through fault injection

A misconfiguration that only surfaces when two processes crash at almost the same time isn't one that you're going to find through basic tests or day-to-day operations. We've done some fault injection as part of our game day exercises, but there's always more you can do in that area. Harsher tests of the Postgres cluster, and automation like Chaos Monkeythat continually injects failure are both ideas we're keen to pursue.

Automation erodes knowledge

It turns out that when your automation successfully handles failures for two years, your skills in manually controlling the infrastructure below it atrophy. There's no "one size fits all" here. It's easy to say "just write a runbook", but if multiple years go by before you next need it, it's almost guaranteed to be out-of-date.

There are definitely ways to combat this. One possibility we're thinking of is adding arbitrary restrictions to some of our game day exercises (e.g. "this cluster is down, the automation has failed, you can't diagnose the problem, and need to bring the service back another way").

The elephant in the room

We're sure some of you are asking why we even run our own Postgres instances when there are hosted options out there. We couldn't end this write-up without talking about that a little.

We do periodically consider the options out there for managed Postgres services. Until recently, they were somewhat lacking in a few areas we care about. Without turning this article into a provider comparison, it's only a recent development for any provider to offer zero-downtime patch upgrades of Postgres, which is something we've been doing for a while.

The other thing that's made us rule out managed Postgres services so far is that most of our our infrastructure is in a bare-metal hosting provider. The added latency between that provider's datacentres and a hosted Postgres service would cause some fairly drastic re-work for our application developers, who can currently assume a latency to Postgres of a millisecond or lower.

Of course, nothing is set in stone. Our hosting situation can change over time, and so can the offerings of the various hosting providers out there. We'll keep our eyes on it, and perhaps one day wave goodbye to running Postgres clusters ourselves.

Closing thoughts

We'd like to apologise one last time for this incident. We know how much trust people put in their payment providers, and we strive to run a reliable service that reinforces that trust.

At the same time, we strongly believe in learning from failure when it does happen. It's encouraging to see that blameless post-mortems are becoming increasingly common in operations disciplines (whether you happen to call that DevOps, SRE, or something else). We hope you've found this one interesting and useful.

You can find us at @GoCardlessEng on Twitter if you've got comments or questions. We'll try to answer them as best we can!

Records of payments, merchant and customer details, etc ↩

In Postgres' standard replication setup, only the primary node can accept writes. ↩

At this point we weren't at all comfortable with the cluster automation, and we knew we would have to spend time digging into what went wrong. The team recognised that this was going to be a detailed investigation with a lot of moving parts, and could take multiple days - potentially a week or two. It was essential that we were comfortable in the safe running of GoCardless' services while we did that. ↩